QUANTUM AI, THE RISE OF HYBRID INTELLIGENCE

The artificial intelligence revolution that has dominated headlines since ChatGPT's emergence may soon appear, as predicted, like "child's play." Not because AI has failed to deliver transformative capabilities, but because an even more profound technological convergence is materializing at the intersection of quantum computing, artificial intelligence, and biological systems. As of October 2025, a series of groundbreaking demonstrations from laboratories spanning Denmark to Chicago, Paris to Australia, suggest we are witnessing the opening act of what could be the most consequential technological transformation of the 21st century, one that will fundamentally reshape not only how we build AI systems, but whether the massive data centre infrastructure underpinning today's AI boom will remain economically viable in its current form.

Between August and September 2025 alone, the quantum computing field achieved three milestone breakthroughs that collectively signal an inflection point. Alice & Bob, a French quantum computing startup, demonstrated superconducting "cat qubits" maintaining bit-flip stability for over an hour, shattering the previous record of seven minutes and exceeding by fourfold their own 2030 roadmap targets. The Technical University of Denmark's bigQ centre proved a definitive quantum learning advantage, reducing a machine learning task from an estimated 20 million years on classical hardware to 15 minutes using entangled photonics. Most remarkably, researchers at the University of Chicago's Pritzker School of Molecular Engineering transformed a fluorescent protein found in living cells into a functioning quantum bit, opening unprecedented possibilities for quantum sensing within biological systems and bridging the once-insurmountable divide between quantum physics and molecular biology. These are not incremental improvements—they represent categorical leaps that validate decades of theoretical work and position quantum-enhanced AI as imminent rather than aspirational. https://alice-bob.com/newsroom/alice-bob-surpasses-bit-flip-stability-record/

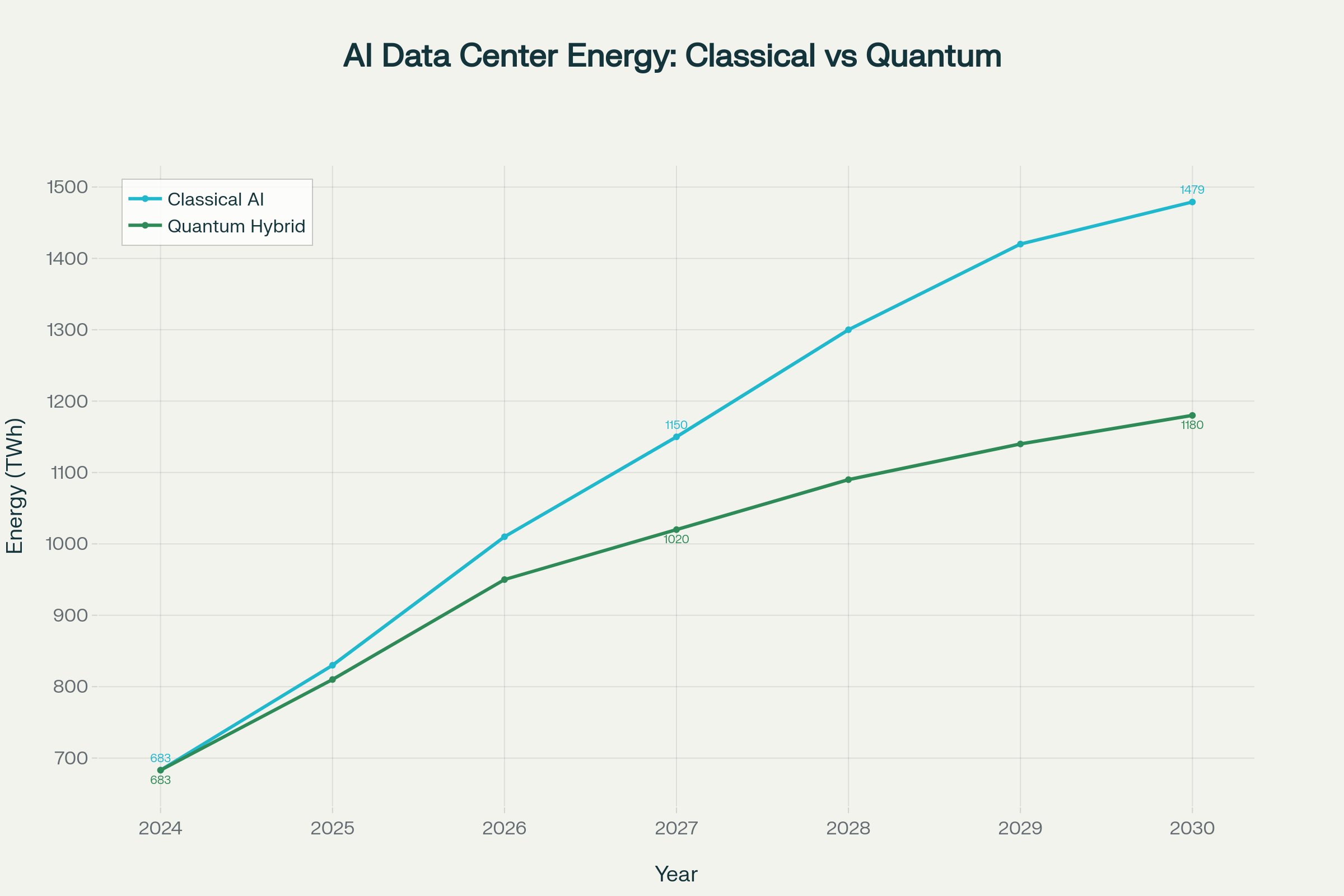

Projected energy consumption for AI data centres shows quantum-hybrid systems could reduce power demand by over 20% by 2030, potentially saving 299 TWh annually—equivalent to the electricity consumption of a mid-sized country - Amazon AWS

The Quantum Advantage: From Theoretical Promise to Demonstrated Reality

For years, quantum computing's practical utility has been debated in academic circles and dismissed by sceptics as perpetually "20 years away." Those dismissals now ring hollow. In 2025, D-Wave Systems achieved genuine quantum supremacy through quantum annealing, demonstrating that their Advantage2 quantum computer could simulate complex quantum dynamics in minutes—calculations that would require the world's fastest supercomputer at Oak Ridge National Laboratory nearly one million years to complete with equivalent accuracy, consuming more electricity than the entire world generates annually. This wasn't a carefully contrived mathematical problem designed to showcase quantum superiority; it was a practical simulation of spin glass dynamics with direct applications to materials science and optimization problems that bottleneck classical AI systems.

The implications ripple across the entire AI ecosystem. IonQ and Kipu Quantum solved the most complex protein folding problem ever executed on quantum hardware, a three-dimensional structure involving up to 12 amino acids, achieving optimal solutions across all test scenarios using IonQ's Forte trapped-ion quantum systems. Protein folding represents one of biology's most computationally intractable problems, yet it is fundamental to drug discovery, enzyme design, and understanding disease mechanisms at molecular levels. Classical supercomputers struggle as folding problems grow in size; quantum systems are beginning to handle small instances reliably. When combined with AI's pattern recognition capabilities, quantum-accelerated protein simulation could compress drug discovery timelines from years to months, potentially saving millions of lives and hundreds of billions of dollars.

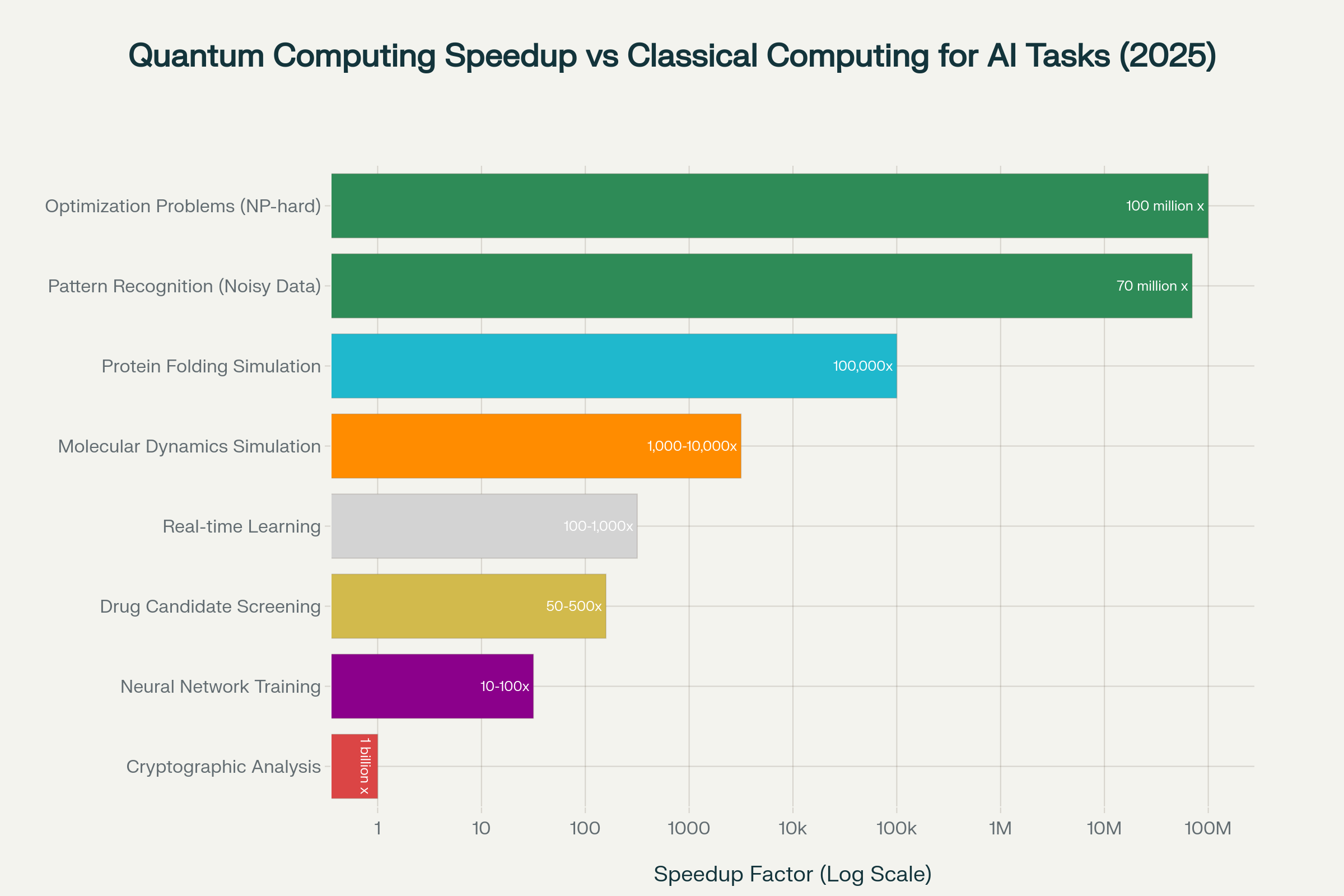

Quantum computing demonstrates exponential speedups across key AI workloads, with the most dramatic improvements in cryptographic analysis (1 billion x), optimization problems (100 million x), and pattern recognition in noisy data (70 million x)

Perhaps most significant from a theoretical standpoint, researchers at the University of Texas at Austin and quantum computing firm Quantinuum achieved what they term "unconditional quantum information supremacy", a permanent, mathematically proven demonstration that quantum systems can outperform any classical computer, regardless of future algorithmic improvements. Unlike Google's controversial 2019 quantum supremacy claim, which IBM challenged by showing the calculation could be optimized for classical hardware, this result cannot be circumvented. The separation between quantum and classical capabilities is provable, establishing that Hilbert space, the vast mathematical framework describing quantum systems, represents a genuine physical resource that quantum computers uniquely access.
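To give a feel for why Hilbert space counts as a resource, the sketch below estimates the memory a classical machine would need just to store the full state of an n-qubit register. It assumes a dense state vector with one 16-byte complex number per amplitude; the function name and the chosen qubit counts are illustrative and do not come from the UT Austin and Quantinuum work.

```python
def state_vector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to store every amplitude of an n-qubit pure state.

    Assumes a dense representation: 2**n complex amplitudes, each stored
    as two 64-bit floats (16 bytes) unless stated otherwise.
    """
    if num_qubits < 0:
        raise ValueError("num_qubits must be non-negative")
    return (2 ** num_qubits) * bytes_per_amplitude


if __name__ == "__main__":
    # Each additional qubit doubles the classical memory requirement.
    for n in (10, 30, 50):
        print(f"{n} qubits: {state_vector_bytes(n):,} bytes")
```

Under these assumptions 30 qubits already need about 17 GB, and 50 qubits need about 18 petabytes, which is why brute-force classical simulation stops being practical well before hardware reaches useful sizes.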

Artificial Intelligence's Computational Bottleneck

To understand why quantum computing matters for AI, we must first acknowledge AI's fundamental limitations. Large language models like GPT-5, Claude, and their successors contain hundreds of billions of parameters and are trained by gradient descent optimization across massive datasets, a process that demands staggering computational resources. Training GPT-3 reportedly consumed 1,287 megawatt-hours of electricity and cost approximately $4.6 million in cloud computing resources. Each ChatGPT query consumes nearly 10 times the electricity of a Google search and generates approximately 4.32 grams of CO2 emissions. As AI capabilities scale, these demands grow exponentially.

Current projections estimate that AI data centres will consume 1,479 terawatt-hours of electricity annually by 2030, more than double the 683 TWh consumed in 2024. This represents approximately 9% of total U.S. electricity consumption and carries profound implications for grid stability, carbon emissions, and the economic viability of continued AI scaling. The International Energy Agency projects global data centre energy consumption could increase between 35% and 128% by 2026, with AI workloads accounting for 10-20% of power draw. Barclays Research estimates that U.S. data centres alone could require above 5.5% of national electricity by 2027, escalating to more than 9% by 2030.
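The headline figures can be checked with a few lines of arithmetic. The sketch below uses only numbers quoted in this article (683 TWh for 2024, 1,479 TWh for 2030, and the 299 TWh saving from the chart caption above); the constant and function names are illustrative.

```python
# Figures quoted in the article, in terawatt-hours per year.
AI_DC_2024_TWH = 683
AI_DC_2030_TWH = 1479
HYBRID_SAVING_2030_TWH = 299  # projected quantum-hybrid saving from the chart caption


def growth_factor(start_twh: float, end_twh: float) -> float:
    """How many times larger the end value is than the start value."""
    return end_twh / start_twh


def saving_fraction(saving_twh: float, baseline_twh: float) -> float:
    """Share of the baseline demand that the saving represents."""
    return saving_twh / baseline_twh


if __name__ == "__main__":
    print(f"2024 to 2030 growth: {growth_factor(AI_DC_2024_TWH, AI_DC_2030_TWH):.2f}x")
    print(f"Hybrid saving in 2030: {saving_fraction(HYBRID_SAVING_2030_TWH, AI_DC_2030_TWH):.1%}")
    print(f"Remaining demand: {AI_DC_2030_TWH - HYBRID_SAVING_2030_TWH} TWh")
```

The ratio comes out at roughly 2.17, consistent with "more than double", and 299 TWh is just over 20% of the 2030 projection, which matches the "over 20%" claim in the caption.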

These aren't abstract statistics; they represent physical constraints on AI's continued evolution. Training increasingly sophisticated models demands proportionally greater energy, and the laws of thermodynamics impose hard limits on classical computing efficiency. The Landauer limit defines the minimum energy required to erase one bit of information at a given temperature; we are approaching those theoretical boundaries. Without a fundamentally different computational paradigm, AI scaling will eventually hit a wall defined not by algorithmic ingenuity but by basic physics and economic feasibility.
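For reference, the Landauer limit is E = k_B · T · ln 2 per erased bit, where k_B is the Boltzmann constant and T the absolute temperature. The sketch below evaluates it at an assumed room temperature of 300 K; the temperature and the function name are illustrative choices, not values taken from the article's sources.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact value in the 2019 SI definition


def landauer_limit_joules(temperature_kelvin: float) -> float:
    """Minimum energy dissipated when one bit is erased at the given temperature."""
    if temperature_kelvin <= 0:
        raise ValueError("temperature must be positive")
    return BOLTZMANN_J_PER_K * temperature_kelvin * math.log(2)


if __name__ == "__main__":
    room_temperature = 300.0  # assumed operating temperature in kelvin
    print(f"{landauer_limit_joules(room_temperature):.3e} J per bit")
```

At 300 K the bound is about 2.87 × 10^-21 joules per bit, and it scales linearly with temperature.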

Quantum Machine Learning: The Computational Escape Velocity

Quantum computing offers precisely the paradigm shift required to circumvent classical AI's bottlenecks. Quantum machine learning (QML) algorithms leverage superposition, entanglement, and quantum interference to process information in ways classical systems fundamentally cannot replicate. The DTU quantum learning advantage demonstration provides the clearest empirical validation: by using entangled light to characterize noisy quantum channels, researchers achieved a speedup factor of approximately 70 million compared to classical approaches. The task involved learning a system's "noise fingerprint", exactly the type of characterization required for training robust AI models in real-world conditions where data is inevitably corrupted by measurement uncertainty and environmental interference.

Variational quantum eigensolvers (VQEs) and quantum approximate optimization algorithms (QAOA) represent the near-term workhorses of quantum-enhanced AI. VQEs are hybrid quantum-classical algorithms designed to find ground state energies of molecular systems, precisely the calculations required for drug discovery, materials science, and understanding biochemical processes. Traditional density functional theory (DFT) calculations scale poorly with system size and often produce inaccurate results for complex molecules. VQE achieves chemical accuracy for small systems even on today's noisy intermediate-scale quantum (NISQ) devices and promises clear scaling pathways as quantum hardware improves.
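The hybrid loop behind a VQE can be sketched in a few lines. The example below is a toy, classically simulated version: it assumes a hypothetical single-qubit Hamiltonian H = Z + 0.5·X and a one-parameter ansatz Ry(θ)|0⟩, both chosen only for illustration. On real hardware the `energy` function would be estimated from repeated circuit measurements, while the outer gradient-descent loop would still run on a classical computer.

```python
import math

# Hypothetical Hamiltonian H = COEFF_Z * Z + COEFF_X * X, for illustration only.
COEFF_Z = 1.0
COEFF_X = 0.5


def energy(theta: float) -> float:
    """Expectation value of H in the state Ry(theta)|0>.

    For this ansatz <Z> = cos(theta) and <X> = sin(theta). A quantum
    processor would estimate these values by sampling.
    """
    return COEFF_Z * math.cos(theta) + COEFF_X * math.sin(theta)


def parameter_shift_gradient(theta: float) -> float:
    """Gradient obtained from two shifted energy evaluations."""
    shift = math.pi / 2
    return 0.5 * (energy(theta + shift) - energy(theta - shift))


def run_vqe(theta: float = 0.1, learning_rate: float = 0.2, steps: int = 200):
    """Classical outer loop: gradient descent on the circuit parameter."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, energy(theta)


if __name__ == "__main__":
    best_theta, best_energy = run_vqe()
    exact = -math.sqrt(COEFF_Z ** 2 + COEFF_X ** 2)  # lowest eigenvalue of H
    print(f"VQE energy {best_energy:.6f}, exact ground energy {exact:.6f}")
```

The same division of labour applies to larger problems: the quantum device evaluates energies for a parametrized state, and a classical optimizer decides which parameters to try next.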

QAOA addresses combinatorial optimization problems, the NP-hard challenges that plague logistics, supply chain management, financial portfolio optimization, and neural network architecture search. D-Wave's quantum annealing systems have demonstrated 100 million-fold speedups for specific optimization problems. When applied to AI hyperparameter tuning (selecting optimal learning rates, network architectures, and regularization parameters), quantum annealing could reduce optimization cycles from weeks to minutes, dramatically accelerating model development. Early results suggest quantum annealing can accelerate AI hyperparameter optimization by factors exceeding 100x, transforming what is currently the most time-consuming bottleneck in model development into a near-instantaneous process.
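Both QAOA and quantum annealers expect the problem to be phrased as minimizing a cost over binary variables. As a minimal sketch of that formulation, the example below writes Max-Cut on a small hypothetical graph in QUBO form and solves it by exhaustive classical search; the graph and function names are illustrative, and the brute-force search is a classical stand-in, not a quantum algorithm.

```python
from itertools import product

# Hypothetical 4-node graph (a square plus one diagonal), for illustration only.
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
NUM_NODES = 4


def cut_size(assignment, edges):
    """Number of edges whose endpoints fall on opposite sides of the cut."""
    return sum(1 for u, v in edges if assignment[u] != assignment[v])


def qubo_cost(assignment, edges):
    """QUBO objective for Max-Cut.

    Each edge contributes 2*x_u*x_v - x_u - x_v, which is -1 when the
    edge is cut and 0 otherwise, so minimizing the cost maximizes the cut.
    """
    return sum(
        2 * assignment[u] * assignment[v] - assignment[u] - assignment[v]
        for u, v in edges
    )


def brute_force_minimum(edges, num_nodes):
    """Try all 2**n bitstrings; feasible only for very small n."""
    return min(
        product((0, 1), repeat=num_nodes),
        key=lambda bits: qubo_cost(bits, edges),
    )


if __name__ == "__main__":
    best = brute_force_minimum(EDGES, NUM_NODES)
    print(best, "cuts", cut_size(best, EDGES), "edges")
```

The exhaustive search doubles in cost with every added variable, which is the scaling that annealing and QAOA hardware aim to sidestep for suitable problem instances.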

Quantum neural networks (QNNs) represent an even more radical reimagining of AI architecture. Theoretical analysis from Google Quantum AI suggests QNNs could learn certain types of neural networks exponentially faster than classical gradient-based methods, particularly for periodic neurons and functions common in machine learning tasks. More provocatively, some research indicates QNNs might train deep learning models using only 1% of the data required by classical methods, extracting patterns from datasets that would be statistically insignificant for conventional approaches. If validated at scale, this capability would democratize AI development by eliminating the massive data collection requirements that currently favour well-resourced technology giants. https://briandcolwell.com/quantum-annealing-in-2025-achieving-quantum-supremacy-practical-applications-and-industrial-adoption/

The Data Center Consolidation Hypothesis

These quantum advantages carry profound implications for AI infrastructure. If quantum-enhanced AI can achieve comparable or superior performance using exponentially less energy, the economic case for today's massive data centre buildout begins to crumble. Consider the arithmetic: researchers at Cornell University developed a quantum computing framework for AI data centres that reduced energy consumption by 12.5% and carbon emissions by 9.8% using current NISQ-era quantum hardware operating in hybrid mode with classical systems. As quantum technology matures toward fault-tolerant systems with hundreds of logical qubits - expected within 5-7 years according to industry roadmaps - these efficiency gains will compound.

Photonic quantum processors, which operate at room temperature and generate virtually no heat, offer particularly compelling advantages for data centre integration. Q.ANT's Native Processing Server, deployed at the Leibniz Supercomputing Centre in July 2025, promises up to 90x lower energy consumption and 100x greater data centre capacity compared to conventional GPU clusters for AI and simulation workloads. Unlike superconducting quantum processors requiring cryogenic cooling to near absolute zero temperatures, photonic systems eliminate costly cooling infrastructure while delivering comparable computational advantages.

Indonesia and several other Asia-Pacific nations are already constructing quantum-AI hybrid data centres specifically designed to leverage these efficiencies. Digital Realty and Oxford Quantum Circuits partnered with NVIDIA to launch the first quantum-AI data centre in New York City in September 2025. These facilities represent early experiments in a fundamentally different computing architecture where quantum processing units (QPUs) function as specialized accelerators alongside traditional CPUs and GPUs. Much as GPUs revolutionized AI training by accelerating specific matrix operations, QPUs will handle quantum-native tasks like molecular simulation, optimization, and certain pattern recognition problems orders of magnitude more efficiently than any classical system.

The implications for existing data centre infrastructure are sobering. If quantum-hybrid architectures can deliver equivalent or superior AI capabilities while consuming 20-30% less energy, the massive hyperscale data centres currently under construction may represent stranded assets within a decade. Alternatively, we may witness a wave of retrofit projects integrating quantum accelerators into existing facilities, fundamentally altering data centre design principles around hybrid classical-quantum architectures. Either scenario suggests the AI data centre boom of the early 2020s will not continue linearly - a technological discontinuity is approaching that will force infrastructure reallocation at massive scale. https://www.datacenters.com/news/quantum-computing-the-next-data-center-revolution

The Quantum-Biology Convergence: When Physics Meets Life

Perhaps the most philosophically profound development is the emerging convergence between quantum computing, AI, and biological systems. The University of Chicago's creation of protein-based qubits opens possibilities that blur distinctions between living systems and quantum technologies. These fluorescent protein qubits can be genetically encoded directly into cells, positioned with atomic precision, and used as quantum sensors detecting signals thousands of times stronger than conventional quantum sensors. Because they're built by cells themselves rather than engineered in fabrication facilities, protein qubits offer unprecedented opportunities for studying biological processes at quantum resolution while operating at room temperature within living tissues.

Philip Kurian's Quantum Biology Lab at Howard University has demonstrated experimental evidence that biological systems naturally exhibit quantum effects, including "single-photon superradiance", synchronized light emission from molecular groups producing stronger, faster energy bursts than individual molecules could generate. Contrary to conventional wisdom that quantum coherence requires ultra-cold isolation, certain biological structures maintain quantum effects at physiological temperatures through hierarchical symmetries that protect and sustain quantum behaviour. This suggests life itself may have evolved quantum information processing capabilities, and that we can learn from nature's 3.8 billion years of quantum engineering to build more robust quantum technologies.

Researchers at Tufts University and collaborators in Japan are developing bio-computers based on the slime mold Physarum polycephalum, which solves optimization problems like the traveling salesman problem with surprising efficiency. The organism evaluates tens of thousands of paths in near-linear time as problem size increases, computational performance that raises questions about whether biological systems exploit quantum algorithms "under the wetware hood". If validated, this would fundamentally challenge our understanding of computation's boundaries and suggest that quantum-biological-AI convergence represents not merely a technological development but a conceptual revolution in how we understand intelligence itself.

Quantum-enhanced AI systems could integrate real-time biological data from protein qubits positioned within cells, creating adaptive models that respond dynamically to cellular processes at quantum resolution.

This capability would revolutionize drug development, enabling AI models to observe how candidate molecules interact with living cells at the quantum level, predict side effects with unprecedented accuracy, and optimize treatment protocols based on individual patients' cellular quantum signatures. Kipu Quantum and Pasqal's collaboration on drug discovery already demonstrates quantum computing's ability to analyze protein hydration and ligand-protein binding with accuracy impossible for classical systems. When combined with AI's pattern recognition and quantum computing's simulation capabilities, we approach a future where personalized medicine operates at the quantum-cellular interface.

Post-Quantum Security: The Cryptographic Reckoning

While quantum computing promises to supercharge AI capabilities, it simultaneously threatens the cryptographic foundations securing digital infrastructure. Shor's algorithm, when run on a sufficiently powerful quantum computer, can factor large numbers and solve discrete logarithm problems in polynomial time—rendering RSA, ECC, and other public-key cryptography systems that protect financial transactions, government communications, and internet security completely vulnerable. This isn't hypothetical; the threat is imminent enough that the U.S. National Institute of Standards and Technology (NIST) published three post-quantum cryptography (PQC) standards in 2024, with additional algorithms expected in 2025.
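The structure of Shor's algorithm is easier to see with the quantum step replaced by a slow classical stand-in. In the sketch below, `multiplicative_order` finds the period by brute force (the part a quantum computer would perform in polynomial time), and `factor_from_order` is the standard classical post-processing that turns the period into factors. The function names and the small test numbers are illustrative.

```python
from math import gcd


def multiplicative_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This is the period-finding step Shor's algorithm delegates to the
    quantum computer; this loop can take on the order of n iterations.
    """
    if gcd(a, n) != 1:
        raise ValueError("a and n must be coprime")
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_order(n: int, a: int):
    """Classical post-processing: derive factors of n from the order of a."""
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared  # the guess already shares a factor with n
    r = multiplicative_order(a, n)
    if r % 2 == 1:
        return None  # odd order: retry with a different a
    half_power = pow(a, r // 2, n)
    if half_power == n - 1:
        return None  # trivial square root of 1: retry with a different a
    return gcd(half_power - 1, n), gcd(half_power + 1, n)


if __name__ == "__main__":
    print(factor_from_order(15, 7))
    print(factor_from_order(21, 2))
```

For n = 15 and a = 7 the order is 4, and the post-processing yields the factors 3 and 5. The threat to RSA and ECC comes entirely from replacing the brute-force loop with efficient quantum period finding.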

The "harvest now, decrypt later" strategy, where adversaries collect encrypted data today anticipating future quantum decryption capabilities, is already underway. IBM's 2023 research found most organizational leaders expect the transition to post-quantum cryptography to take more than a decade, yet quantum computers capable of breaking current encryption may emerge 5-6 years before most organizations complete their transition. The Cloud Security Alliance strongly recommends enterprises achieve full quantum-readiness by April 14, 2030, a deadline now less than five years away.
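One common way to reason about this timing gap is Mosca's inequality: data is exposed if the time it must stay secret plus the time needed to migrate exceeds the time until a cryptographically relevant quantum computer exists. The sketch below encodes that rule; the example inputs are hypothetical planning numbers, not figures from IBM or the Cloud Security Alliance.

```python
def years_exposed(
    shelf_life_years: float,
    migration_years: float,
    years_until_quantum_attack: float,
) -> float:
    """Mosca's inequality expressed as a number of years of exposure.

    Data is at risk when shelf life + migration time exceeds the time
    until quantum attacks are practical; the return value is the size
    of that overshoot, or 0.0 when there is none.
    """
    overshoot = shelf_life_years + migration_years - years_until_quantum_attack
    return max(0.0, float(overshoot))


if __name__ == "__main__":
    # Hypothetical organization: records must stay confidential for 7 years,
    # migration takes 10 years, and attacks are assumed feasible in 8 years.
    print(years_exposed(7, 10, 8), "years of exposure")
```

With those assumed inputs the overshoot is 9 years, which is why data harvested today can be at risk even if quantum attacks are still years away.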

AI systems will require comprehensive redesign for post-quantum security. Current AI platforms rely on encrypted communication channels, secure model updates, and protected training data—all vulnerable to quantum cryptanalysis. AI itself becomes both solution and threat: machine learning models can accelerate the design of quantum-resistant algorithms, but adversaries can equally deploy AI to discover vulnerabilities in post-quantum cryptography implementations. Meta AI and KTH researchers demonstrated that transformer models can attack "toy versions" of lattice-based cryptography even with minimal training data, exploiting side-channel vulnerabilities that leak information through power consumption traces.

Quantum key distribution (QKD) and quantum-secured networks offer long-term solutions by leveraging quantum mechanics itself for cryptographic security. QKD is theoretically immune to eavesdropping because any attempt to intercept quantum keys disturbs their quantum state, alerting communicating parties to the breach. AI developers can future-proof infrastructures by implementing QKD-secured channels protecting data exchanges between distributed AI models, federated learning nodes, and edge devices, essential security layers for AI operating in defense, finance, and critical infrastructure where quantum threats pose existential risks.
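The eavesdropping-detection property can be illustrated with a small simulation of BB84, the best-known QKD protocol. The sketch below is an idealized model (no channel noise, no photon loss, a simple intercept-resend attacker), and the function name and parameters are illustrative rather than taken from any QKD product.

```python
import random


def bb84_error_rate(num_photons: int, eavesdrop: bool, seed: int = 0) -> float:
    """Simulate idealized BB84 and return the error rate of the sifted key."""
    rng = random.Random(seed)
    sifted = 0
    errors = 0
    for _ in range(num_photons):
        bit = rng.randint(0, 1)
        alice_basis = rng.randint(0, 1)  # 0 = rectilinear, 1 = diagonal
        photon_bit, photon_basis = bit, alice_basis

        if eavesdrop:
            # Intercept-resend attack: Eve measures in a random basis and
            # resends her result prepared in that same basis.
            eve_basis = rng.randint(0, 1)
            if eve_basis != photon_basis:
                photon_bit = rng.randint(0, 1)  # wrong basis gives a random result
            photon_basis = eve_basis

        bob_basis = rng.randint(0, 1)
        if bob_basis == photon_basis:
            bob_bit = photon_bit
        else:
            bob_bit = rng.randint(0, 1)  # wrong basis gives a random result

        # Sifting: keep only rounds where Alice and Bob used the same basis.
        if bob_basis == alice_basis:
            sifted += 1
            errors += int(bob_bit != bit)
    return errors / sifted if sifted else 0.0


if __name__ == "__main__":
    print("no eavesdropper:", bb84_error_rate(4000, eavesdrop=False))
    print("with eavesdropper:", bb84_error_rate(4000, eavesdrop=True))
```

Without an attacker the sifted keys agree exactly in this idealized model, while an intercept-resend attack pushes the error rate toward 25%, a disturbance Alice and Bob can detect by comparing a sample of their key.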

Timeline to Transformation: Nearer Than You Think

Industry experts increasingly converge on a 3-7 year timeline for quantum computing's practical impact on AI systems. Google Quantum AI's director of hardware Julian Kelly told CNBC in March 2025, "We think we're about five years out from a real breakout, kind of practical application that you can only solve on a quantum computer". Jensen Huang, NVIDIA's CEO, stated during his VivaTech 2025 keynote that "we are within reach of being able to apply quantum computing in areas that can solve some interesting problems in the coming years". McKinsey projects quantum computing revenue growing from billions in 2024 to as much as $72 billion by 2035, with early adopters in automotive, chemicals, financial services, and life sciences unlocking up to $1.3 trillion in value.

Alice & Bob's roadmap targets an early fault-tolerant quantum computer (eFTQC) with 100 logical qubits for materials science applications by 2030, now just five years away. IBM's plan aims for a large-scale, fault-tolerant quantum computer by 2029. Microsoft and Atom Computing are co-developing a 24-logical-qubit commercial system, with Microsoft achieving high-fidelity entanglement of 12 logical qubits with Quantinuum. These aren't aspirational research projects—they're commercial product roadmaps with concrete deliverables and customer commitments.

The hybrid classical-quantum computing architecture is already operational. The Poznan Supercomputing and Networking Center in Poland deployed the world's first multi-user, multi-QPU, multi-GPU quantum-integrated HPC cluster, allowing researchers to execute hybrid quantum-classical algorithms on multiple quantum processing units and GPUs simultaneously using standard HPC workload management systems. This demonstrates that quantum computing can integrate seamlessly with existing data centre infrastructure using familiar tools like Slurm schedulers and NVIDIA's CUDA-Q API, eliminating the perception that quantum requires completely novel facilities.

Venture investor Karthee Madasamy, whose fund backs PsiQuantum (valued at $3.15 billion in 2023), predicts commercial quantum applications within "a few years" and expects his portfolio company to go public in 2028. PsiQuantum's photon-based approach promises easier scalability than superconducting or trapped-ion systems, potentially accelerating adoption. Bain & Company's 2025 Technology Report concludes, "Working with companies to assess their quantum readiness, we've seen it takes three to four years on average to go from awareness to a structured approach that includes a strategic roadmap, an ecosystem of partnerships, and pilot programs". Organizations beginning quantum readiness initiatives today will be positioned to capitalize on quantum advantages as they materialize; those delaying face 3-4 year learning curves. https://thequantuminsider.com/2025/03/17/investor-says-quantum-computing-is-underestimated-likely-to-commercialize-in-a-few-years/

Strategic Imperatives: Preparing for the Quantum-AI Era

The convergence of quantum computing and AI demands immediate strategic action across multiple fronts. First, organizations must begin quantum readiness assessments now, identifying which computational workloads could benefit from quantum acceleration, which cryptographic systems require post-quantum migration, and where hybrid quantum-classical architectures offer near-term advantages. The complexity of transitioning thousands of applications and services to quantum-safe cryptography alone justifies starting today; waiting until quantum threats materialize leaves organizations catastrophically vulnerable.

Second, investment in quantum talent and partnerships is essential. The quantum computing field suffers from acute talent shortages, with demand for quantum engineers, physicists, and algorithm developers far exceeding supply. Organizations that cultivate quantum expertise now, through university partnerships, collaborations with quantum computing companies, or direct hiring, will dominate quantum-AI applications as they mature. Partnerships with firms like IBM, Google, Microsoft Azure Quantum, Amazon Braket, and specialized quantum startups provide access to cutting-edge hardware and reduce the barriers to quantum experimentation.

Third, hybrid computing strategies offer immediate value. Even today's NISQ-era quantum processors deliver measurable benefits for specific optimization, simulation, and machine learning tasks when integrated thoughtfully with classical systems. The Binary Bosonic Solver algorithm running on photonic quantum processors has solved optimization problems with up to 30 variables by dividing instances into subproblems processed in parallel. Quantum-enhanced hyperparameter optimization can accelerate model development cycles. Variational quantum algorithms reduce energy consumption for certain tasks by up to 90% compared to classical approaches. These aren't theoretical advantages; they're practical efficiencies achievable today with commercially available quantum cloud services.

Fourth, infrastructure planning must account for quantum integration. Data centres under design today should include provisions for future quantum accelerator integration: vibration isolation, electromagnetic shielding, and either cryogenic cooling capacity for superconducting systems or room-temperature capabilities for photonic approaches. Quantum computing won't replace classical infrastructure but will complement it as specialized accelerators for specific workloads, much as GPUs revolutionized deep learning without eliminating CPUs. Forward-looking data centre operators are already designing "quantum pods", dedicated sections with the specialized infrastructure quantum systems require.

The Dawn of Hybrid Intelligence

The quantum-AI convergence represents far more than incremental technological progress; it signifies a fundamental shift in how we conceptualize computation, intelligence, and the relationship between physics and biology. When quantum systems can reduce 20-million-year learning tasks to 15 minutes, when biological cells can be engineered into quantum sensors, when optimization problems intractable for classical supercomputers become routine for quantum annealers, we are witnessing the emergence of capabilities that transcend the classical paradigm entirely.

The massive AI data centres proliferating across continents may represent the last generation of purely classical computing infrastructure at this scale. Quantum-hybrid architectures promise equivalent or superior AI capabilities with dramatically lower energy consumption, fundamentally altering the economics of intelligence at scale. This doesn't mean quantum computing will render AI obsolete; quite the opposite. Quantum computing will supercharge AI, eliminate its most profound computational bottlenecks, and enable applications currently impossible on any classical system. But it does suggest that the trajectory of AI development is approaching a discontinuity, a phase transition from classical to quantum-enhanced intelligence that will redefine what's computationally achievable.

As quantum computing capabilities mature from laboratory demonstrations to commercial services over the next five years, organizations that prepared for this transition will thrive while those that dismissed quantum as perpetually distant will struggle to catch up. The quantum-AI era isn't arriving in some distant future - it's materializing now, breakthrough by breakthrough, qubit by qubit, at a pace that may soon make even today's most advanced AI systems look like child's play.

The relationship between technology and biology is dissolving as researchers engineer biological qubits, discover natural quantum effects in living systems, and build bio-computers solving optimization problems through quantum processes evolved over billions of years. We stand at the threshold of a unified science where quantum physics, artificial intelligence, and molecular biology converge into hybrid intelligent systems that operate at the intersection of the physical, computational, and biological - a convergence that may ultimately reveal that intelligence itself is fundamentally quantum in nature, and that our classical AI systems are merely approximating the quantum intelligence that life has exploited all along.
